Timeline visualization has become indispensable for understanding complex historical patterns, but the real power lies in what happens before you create that first visual element. Historical data analysis forms the analytical backbone that transforms raw information into compelling, accurate timeline representations.

When organizations collect years of performance metrics, customer interactions, or market changes, they often struggle to extract meaningful patterns from this wealth of information. Systematic historical data analytics techniques provide structured methods to identify the trends, correlations, and significant events that deserve prominent placement on your timeline.

This article explores proven strategies for analyzing historical data to create clear, accurate timelines that communicate complex temporal relationships effectively.

What makes historical data analysis different from standard analytics

Before diving into specific techniques, it's important to understand why historical data requires a different analytical mindset than real-time reporting.

Historical data analysis operates under different constraints and opportunities than real-time analytics. While real-time analysis focuses on immediate insights and rapid decision-making, historical data analysis requires patience, context, and a deeper understanding of how events influence each other across extended periods.

The temporal aspect changes everything. Unlike snapshot analytics, historical analysis must account for:

- seasonal variations that repeat across years;

- long-term trends that emerge only when viewing extended periods;

- external influences that may not be immediately apparent in the data;

- data quality changes that occurred as collection methods evolved.

Consider a retail company analyzing five years of sales data. Standard analytics might show monthly revenue peaks, but historical data analytics reveals how external factors like economic recessions, competitor launches, or supply chain disruptions created lasting impacts that ripple through subsequent periods.

Pattern recognition techniques that work

Now that we understand the unique nature of historical data, let's examine the specific methods that help identify meaningful patterns for timeline construction.

Spotting trends across time periods

The foundation of timeline creation starts with identifying recurring patterns and anomalies in your historical dataset. This process involves several analytical approaches that work together to reveal the story your data tells.

Trend analysis examines long-term movements in your data, helping you identify gradual changes that might span months or years. These trends become the backbone of your timeline, showing progression over time.
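One common way to expose a long-term movement is a centered moving average. The sketch below is only an illustration: the `monthly_sales` figures and the `moving_average` helper are hypothetical, and the window size is an assumption you would tune to your own data.

```python
from statistics import mean

def moving_average(values, window):
    """Return a centered moving average; edges without a full window are None."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        if i < half or i + half >= len(values):
            smoothed.append(None)  # not enough neighbours on one side
        else:
            smoothed.append(mean(values[i - half : i + half + 1]))
    return smoothed

# Hypothetical monthly sales figures (illustrative only)
monthly_sales = [100, 120, 90, 130, 110, 150, 120, 160, 140]
trend = moving_average(monthly_sales, window=3)
print(trend)
```

A wider window smooths more aggressively but leaves more undefined points at each end of the timeline.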

Seasonal decomposition separates your data into trend, seasonal, and irregular components. This technique proves particularly valuable when creating timelines that need to highlight underlying patterns while acknowledging predictable fluctuations.
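As a rough illustration, a classical additive decomposition can be sketched in a few lines. This is a simplified version that assumes an even seasonal period (for example, 4 quarters) and at least two full cycles of data; the `decompose_additive` helper and the synthetic `quarterly` series are made up for the example.

```python
from statistics import mean

def decompose_additive(values, period):
    """Split a series into trend, seasonal, and residual parts (additive model)."""
    if period % 2 != 0 or len(values) < 2 * period:
        raise ValueError("sketch needs an even period and two full cycles")
    n, half = len(values), period // 2
    trend = [None] * n
    for i in range(half, n - half):
        window = values[i - half : i + half + 1]
        # 2 x period moving average: the two end points get half weight
        trend[i] = (sum(window) - 0.5 * (window[0] + window[-1])) / period
    # Average the detrended values for each position in the cycle
    buckets = [[] for _ in range(period)]
    for i, t in enumerate(trend):
        if t is not None:
            buckets[i % period].append(values[i] - t)
    raw = [mean(b) for b in buckets]
    offset = mean(raw)  # center the seasonal pattern around zero
    pattern = [s - offset for s in raw]
    seasonal = [pattern[i % period] for i in range(n)]
    residual = [
        None if t is None else values[i] - t - seasonal[i]
        for i, t in enumerate(trend)
    ]
    return trend, seasonal, residual

# Synthetic quarterly series: a linear trend plus a repeating pattern
known_pattern = [5, -3, -4, 2]
quarterly = [10 + 2 * i + known_pattern[i % 4] for i in range(12)]
trend, seasonal, residual = decompose_additive(quarterly, period=4)
print(seasonal[:4])
```

Because the synthetic series contains no noise, the residuals come out as zero. On real data, the residual component is where the irregular events end up.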

Anomaly detection identifies unusual events or data points that deviate significantly from expected patterns. These anomalies often represent the most important events to highlight on your timeline.
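One simple way to flag candidates is the modified z-score, which measures each point against the median and the median absolute deviation (MAD) so that the outlier itself does not distort the baseline. The sketch below is one option among many; the `weekly_orders` data is hypothetical, and the 3.5 threshold is a conventional default rather than a rule.

```python
from statistics import median

def find_anomalies(values, threshold=3.5):
    """Flag points whose modified z-score exceeds the threshold."""
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # no spread to measure against
    flagged = []
    for index, value in enumerate(values):
        score = 0.6745 * (value - center) / mad
        if abs(score) > threshold:
            flagged.append((index, value))
    return flagged

# Hypothetical weekly order counts with one unusual week
weekly_orders = [100, 102, 98, 101, 99, 103, 97, 180, 100, 101]
anomalies = find_anomalies(weekly_orders)
print(anomalies)
```

Each flagged index is a candidate for a timeline marker, but it still needs a human check to decide whether it reflects a real event or a recording error.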

Statistical methods for historical context

Moving beyond basic pattern recognition, statistical analysis provides the mathematical foundation for confident timeline interpretation:

- Correlation analysis reveals relationships between different data series across time.

- Regression analysis quantifies how variables influence each other over extended periods.

- Time series decomposition separates signal from noise in your historical data.

- Confidence intervals quantify how much uncertainty surrounds each historical estimate.

These methods work together to create a comprehensive understanding of your data's historical behavior, providing the analytical foundation needed for accurate timeline construction.
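To make the first two methods concrete, here is a minimal sketch of Pearson correlation and a least-squares line fit. The `ad_spend` and `revenue` series are invented for illustration, and the helper names are not from any particular library.

```python
from math import sqrt
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

def fit_line(xs, ys):
    """Least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical yearly figures (illustrative only)
ad_spend = [10, 20, 30, 40, 50]
revenue = [24, 47, 63, 88, 103]

r = pearson(ad_spend, revenue)
slope, intercept = fit_line(ad_spend, revenue)
print(round(r, 3), round(slope, 2), round(intercept, 2))
```

A high coefficient here says the two series moved together over the period. It does not say which one drove the other.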

Data cleaning and preparation essentials

Raw historical data rarely arrives ready for analysis. This section covers the preprocessing steps that transform messy datasets into reliable sources for timeline creation.

Handling missing information

Historical datasets frequently contain gaps where data wasn't collected, systems were down, or recording methods changed. Your approach to these gaps significantly impacts timeline accuracy.

Forward filling carries the last known value across a gap, which is appropriate when the value probably stayed stable until the next reading. Interpolation estimates missing values from the surrounding data points, which is useful when you have reason to believe changes occurred gradually and smoothly over time.

Exclusion strategies sometimes prove more appropriate than estimation, particularly when missing data represents genuinely absent events rather than recording failures.
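The difference between the two fill strategies is easiest to see side by side. In this sketch, gaps are represented as `None` in a hypothetical `readings` list; both helpers are illustrative and leave leading or trailing gaps untouched where no estimate is possible.

```python
def forward_fill(values):
    """Carry the last known value across each gap."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled

def interpolate_linear(values):
    """Fill gaps between known points with evenly spaced estimates."""
    filled = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (values[right] - values[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = values[left] + step * (i - left)
    return filled

# Hypothetical series with two gaps
readings = [10, None, None, 16, None, 20]
print(forward_fill(readings))
print(interpolate_linear(readings))
```

Whichever strategy you choose, it helps to keep a record of which points were estimated so the timeline can mark them differently from observed values.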

Standardizing time formats and intervals

Historical data often comes from multiple sources with different time recording conventions. Creating coherent timelines requires standardization:

- convert all timestamps to a consistent timezone and format;

- establish uniform intervals (daily, weekly, monthly) based on your analysis goals;

- account for calendar irregularities like leap years or daylight saving time changes;

- align data from different sources to comparable time periods.
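The checklist above can be sketched as a small normalization step. The example assumes three hypothetical records in two different formats and treats timestamps without a zone as already being in UTC; that assumption needs checking against each real source.

```python
from collections import defaultdict
from datetime import datetime, timezone

def to_utc(text, fmt=None):
    """Parse a timestamp and return it as a timezone-aware UTC datetime."""
    parsed = datetime.strptime(text, fmt) if fmt else datetime.fromisoformat(text)
    if parsed.tzinfo is None:
        # Assumption: naive timestamps were recorded in UTC
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)

# Hypothetical records: (timestamp text, format or None for ISO 8601, amount)
raw_events = [
    ("2023-03-31T22:30:00-05:00", None, 120),
    ("15/03/2023 14:00", "%d/%m/%Y %H:%M", 80),
    ("2023-04-02T09:00:00+02:00", None, 50),
]

# Align every record to a uniform monthly interval
monthly_totals = defaultdict(int)
for text, fmt, amount in raw_events:
    ts = to_utc(text, fmt)
    monthly_totals[(ts.year, ts.month)] += amount

print(dict(monthly_totals))
```

Note that the first record is dated March 31 in local time but falls on April 1 in UTC, so the choice of reference timezone changes which period it lands in.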

Quality assessment and validation

Before incorporating historical data into timeline visualizations, systematic quality assessment prevents misleading representations:

Check for logical consistency by comparing related data series for impossible combinations. Verify temporal accuracy by confirming that events appear in chronologically possible sequences. Assess completeness by identifying systematic gaps that might skew your timeline interpretation.
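These three checks translate fairly directly into code. The sketch below uses a hypothetical `records` list in which returns should never exceed sales and entries should arrive in date order; the field names and rules are assumptions for illustration.

```python
from datetime import date, timedelta

def find_gaps(days, step=timedelta(days=1)):
    """Return (first_missing, last_missing) pairs between consecutive dates."""
    ordered = sorted(days)
    gaps = []
    for prev, cur in zip(ordered, ordered[1:]):
        if cur - prev > step:
            gaps.append((prev + step, cur - step))
    return gaps

def out_of_order(days):
    """Return positions where a date is earlier than the one before it."""
    return [i for i in range(1, len(days)) if days[i] < days[i - 1]]

# Hypothetical daily records (illustrative only)
records = [
    {"day": date(2024, 1, 1), "sold": 10, "returned": 1},
    {"day": date(2024, 1, 2), "sold": 8, "returned": 9},   # more returns than sales
    {"day": date(2024, 1, 5), "sold": 12, "returned": 0},
    {"day": date(2024, 1, 4), "sold": 7, "returned": 2},   # recorded out of sequence
]

days = [r["day"] for r in records]
inconsistent = [i for i, r in enumerate(records) if r["returned"] > r["sold"]]
misordered = out_of_order(days)
gaps = find_gaps(days)
print(inconsistent, misordered, gaps)
```

Running checks like these before visualization gives you a list of records to investigate rather than a timeline that silently contains them.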

Advanced analysis methods for complex timelines

With clean, well-prepared data, you can apply more sophisticated analytical techniques that reveal deeper insights for timeline construction.

Comparative timeline analysis

Single timeline analysis provides limited insight compared to comparative approaches that examine multiple data series simultaneously. This method reveals interactions and dependencies that single-series analysis misses.

Parallel timeline analysis displays multiple related data series on synchronized time axes, making correlations and cause-effect relationships visible.

Offset correlation analysis examines how events in one timeline predict changes in another timeline after specific delays.
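A basic version of offset correlation shifts one series against the other and measures the correlation at each delay. In this sketch, the `leader` and `follower` series are synthetic, constructed so that the follower repeats the leader two periods later; the helper names are illustrative.

```python
from math import sqrt
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either series has no variation."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / sqrt(sxx * syy)

def best_lag(leader, follower, max_lag):
    """Correlate leader[t] with follower[t + lag] for each lag; return the best."""
    scores = {}
    for lag in range(max_lag + 1):
        scores[lag] = pearson(leader[: len(leader) - lag], follower[lag:])
    return max(scores, key=scores.get), scores

# Synthetic data: the follower echoes the leader two periods later
leader = [1, 3, 2, 5, 4, 6, 8, 7, 9, 10]
follower = [0, 0, 1, 3, 2, 5, 4, 6, 8, 7]

lag, scores = best_lag(leader, follower, max_lag=4)
print(lag, round(scores[lag], 3))
```

On real data the peak is rarely this clean, and series that both trend upward can score highly at every lag, so it is worth removing the trend first.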

Cohort analysis groups similar entities (customers, products, regions) and compares their historical patterns, revealing which factors contribute to different outcomes over time.
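A minimal cohort comparison groups entities by a shared starting point and averages their history period by period. The `customers` data below is hypothetical, with each customer tagged by signup quarter and followed for three months of spend.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical customers: (signup cohort, spend in months 1, 2, 3)
customers = [
    ("2022-Q1", [100, 80, 60]),
    ("2022-Q1", [120, 100, 90]),
    ("2022-Q2", [90, 85, 88]),
    ("2022-Q2", [110, 95, 92]),
]

# Group the spending histories by cohort
by_cohort = defaultdict(list)
for cohort, spend in customers:
    by_cohort[cohort].append(spend)

# Average spend per cohort for each month since signup
cohort_curves = {
    cohort: [mean(month) for month in zip(*histories)]
    for cohort, histories in by_cohort.items()
}
print(cohort_curves)
```

Plotting each cohort curve on the same relative time axis makes it easier to see whether later cohorts retain value differently from earlier ones.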

Using historical patterns to predict future events

While historical data analysis focuses on past events, it provides the foundation for understanding likely future patterns. This predictive component adds value to timeline interpretation.

Cycle identification reveals recurring patterns that help predict future timeline developments. Trend extrapolation uses historical trajectories to estimate continuation patterns, though this requires careful consideration of changing conditions.

Leading indicator analysis identifies early signals within your historical data that preceded significant events, helping you recognize similar patterns as they develop.
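For cycle identification, one simple approach is to correlate a series with shifted copies of itself and look for the shift that matches best. The sketch below uses a lagged Pearson correlation as a stand-in for a formal autocorrelation function, and the `visits` series is a synthetic pattern that repeats every four periods.

```python
from math import sqrt
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either series has no variation."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / sqrt(sxx * syy)

def autocorrelation(values, lag):
    """Correlate the series with itself shifted by `lag` periods."""
    return pearson(values[:-lag], values[lag:])

# Synthetic series with a four-period cycle (illustrative only)
visits = [12, 4, 3, 9] * 4

scores = {lag: autocorrelation(visits, lag) for lag in range(1, 7)}
dominant_period = max(scores, key=scores.get)
print(dominant_period)
```

Multiples of the true period also score highly, so the search range here stops below twice the expected cycle length.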

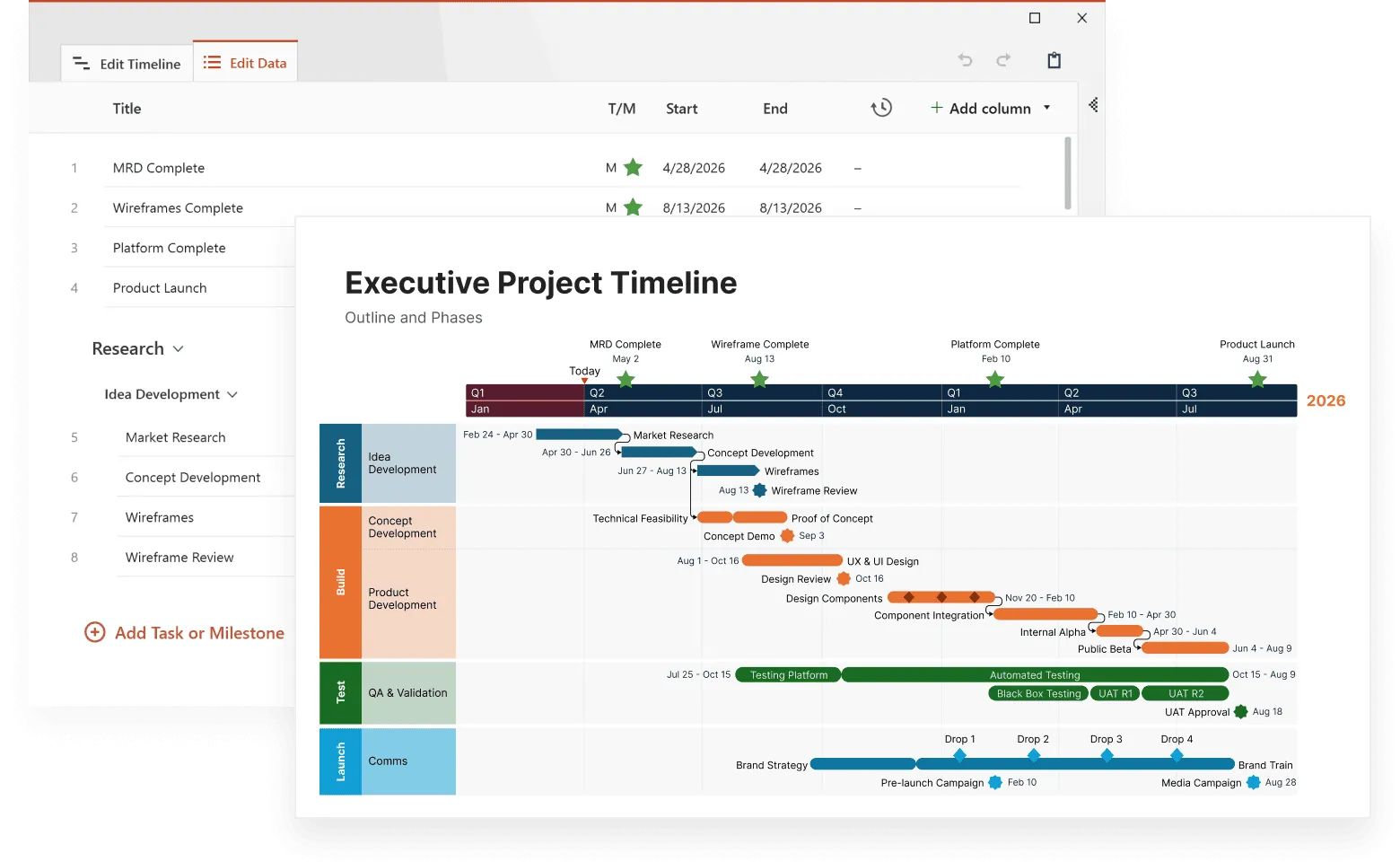

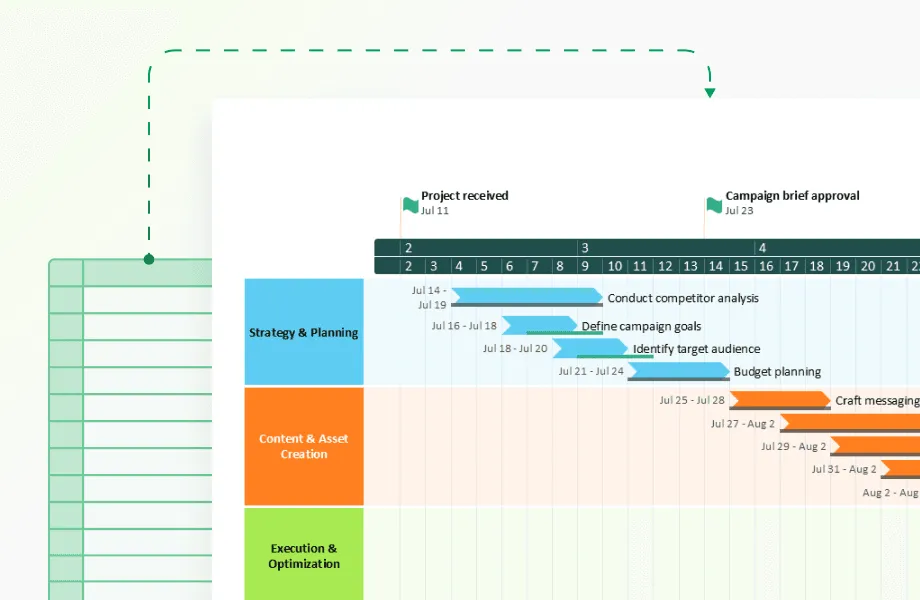

Choosing the right tools for your analysis

The software and systems you choose can make or break your historical data analysis project. Here's what works best for different situations.

Software solutions for historical analysis

Modern historical data analytics requires tools capable of handling large datasets while providing sophisticated analytical capabilities.

Statistical software packages like R and Python offer comprehensive libraries for time series analysis, pattern recognition, and advanced statistical modeling. These tools excel at complex analytical tasks but require technical expertise.

Business intelligence platforms provide user-friendly interfaces for historical analysis while maintaining analytical rigor. These solutions often include built-in timeline visualization capabilities.

Specialized timeline software focuses specifically on historical data presentation, offering templates and features designed for timeline creation while incorporating analytical tools.

Database and storage considerations

Historical data analysis demands robust data storage and retrieval capabilities that can handle both volume and complexity.

Time series databases optimize storage and querying for temporal data, and they often perform better than general-purpose relational databases on time-range queries and large historical scans.

Data warehousing solutions organize historical data for analytical access while maintaining data integrity and providing audit trails for data quality assurance.

Cloud-based platforms offer scalable storage and processing power for large historical datasets, enabling analysis of extensive time periods without local hardware limitations.

Real-world applications

Understanding how different industries apply these techniques helps illustrate the practical value of historical data analysis for timeline creation.

Healthcare timeline analysis

Medical organizations use historical data analytics to track patient outcomes, treatment effectiveness, and disease progression patterns. Timeline analysis reveals how treatments perform over extended periods and identifies factors that influence patient recovery trajectories.

Emergency response planning benefits from historical analysis of incident patterns, resource utilization, and response times. These insights inform staffing decisions and resource allocation strategies.

Financial services applications

Investment firms analyze historical market data to identify patterns that inform portfolio management decisions. Timeline analysis reveals how different asset classes performed under various economic conditions and can inform decisions about investment timing.

Risk management departments use historical data analysis to understand how various risk factors evolved during past market events, informing current risk assessment procedures.

Manufacturing and operations

Production facilities analyze historical performance data to identify optimization opportunities and predict maintenance requirements. Timeline analysis reveals how different operational changes impacted productivity and quality metrics.

Supply chain management uses historical data analytics to understand supplier performance patterns, seasonal demand variations, and logistics optimization opportunities.

Overcoming common analysis challenges

Every historical data analysis project encounters predictable obstacles. Here's how to handle the most common problems that can derail timeline accuracy.

Managing large datasets efficiently

Historical datasets often contain millions of data points spanning years or decades. Processing this volume requires strategic approaches that balance thoroughness with practical constraints.

Sampling strategies reduce computational requirements while maintaining analytical validity. Data aggregation combines detailed data into meaningful summary statistics appropriate for timeline visualization.

Parallel processing techniques distribute analytical workloads across multiple computing resources, reducing analysis time for large datasets.
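Aggregation is usually the simplest of these to apply. The sketch below rolls hypothetical daily values up into monthly summary statistics; the generated `daily` series just counts upward so the result is easy to verify by eye.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean

# Hypothetical daily readings for Q1 2024: values 1, 2, 3, ... 91
start = date(2024, 1, 1)
daily = [(start + timedelta(days=i), i + 1) for i in range(91)]

# Group the daily values by calendar month
by_month = defaultdict(list)
for day, value in daily:
    by_month[(day.year, day.month)].append(value)

# Reduce each month to a handful of summary statistics
summary = {
    month: {
        "count": len(values),
        "min": min(values),
        "mean": mean(values),
        "max": max(values),
    }
    for month, values in by_month.items()
}
print(summary[(2024, 2)])
```

Keeping the minimum and maximum alongside the mean preserves some sense of the spread that a single average would hide on the timeline.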

Ensuring interpretation accuracy

Historical data analysis produces insights that influence important decisions, making accuracy paramount throughout the analytical process.

Multiple validation approaches confirm findings through different analytical methods. Expert review processes incorporate domain knowledge to verify that analytical findings align with known historical context.

Sensitivity analysis examines how changes in analytical assumptions affect conclusions, helping identify robust insights versus fragile findings.
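A lightweight sensitivity check reruns the same analysis under several reasonable settings and compares the conclusions. In this sketch, the assumption being varied is the averaging window used to judge trend direction; the `monthly_sales` data and the `trend_direction` helper are hypothetical.

```python
from statistics import mean

def trend_direction(values, window):
    """Compare the average of the first and last `window` points."""
    start, end = mean(values[:window]), mean(values[-window:])
    if end > start:
        return "up"
    if end < start:
        return "down"
    return "flat"

# Hypothetical monthly sales figures (illustrative only)
monthly_sales = [100, 120, 90, 130, 110, 150, 120, 160, 140, 170, 150, 180]

# Rerun the same question with different window assumptions
results = {w: trend_direction(monthly_sales, w) for w in (3, 5, 7)}
is_robust = len(set(results.values())) == 1
print(results, is_robust)
```

If the answer flips when the window changes, the finding is fragile and probably should not be presented as a headline event.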

Simplifying complex visualizations

Converting complex historical analysis into clear, understandable timelines presents ongoing challenges that require thoughtful design decisions.

Progressive disclosure techniques present overview information first, allowing users to explore detailed data as needed. Interactive elements enable users to adjust time scales, filter data series, and explore specific time periods.

Multiple perspective options provide different views of the same historical data, accommodating various user needs and analytical questions.

Best practices for accurate timeline interpretation

Success in historical data analysis requires following proven practices that prevent common mistakes and improve reliability.

Context integration strategies

Historical data gains meaning through context that places analytical findings within broader circumstances that influenced the recorded events.

Research external factors that influenced your data during specific time periods. Economic conditions, regulatory changes, competitive actions, and technological developments all impact historical patterns.

Document assumptions and limitations inherent in your analytical approach. Clear documentation helps users understand the scope and reliability of your timeline interpretations.

Validation procedures that work

Systematic validation prevents misinterpretation of historical patterns and builds confidence in your timeline accuracy.

- cross-reference findings with known historical events to verify timeline accuracy;

- test analytical methods on data periods where outcomes are known;

- seek feedback from domain experts familiar with the historical context;

- compare results across different analytical approaches to identify consistent findings.

Communication strategies for stakeholders

Your analysis is only valuable if others can understand and act on your findings. Clear communication bridges the gap between complex analysis and practical application.

Present uncertainty ranges alongside point estimates to convey analytical confidence levels. Explain methodology decisions that significantly impact interpretation. Provide context for users unfamiliar with historical background affecting your data.
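One way to produce such a range is a confidence interval around the mean. The sketch below uses a normal approximation, which is an assumption: with only a handful of observations, an interval based on the t-distribution would be somewhat wider. The `growth_rates` values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

def mean_with_interval(values, confidence=0.95):
    """Return (point estimate, lower bound, upper bound) for the mean."""
    point = mean(values)
    # Two-sided critical value from the standard normal distribution
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * stdev(values) / sqrt(len(values))
    return point, point - margin, point + margin

# Hypothetical yearly growth rates in percent (illustrative only)
growth_rates = [4.0, 5.5, 3.5, 6.0, 5.0, 4.5, 5.5, 6.0]

point, low, high = mean_with_interval(growth_rates)
print(f"{point:.1f}% (95% interval: {low:.2f}% to {high:.2f}%)")
```

Reporting the range next to the point estimate tells stakeholders how much weight the number can bear.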

Historical data analysis transforms timeline creation from simple chronological listing into meaningful narrative construction. By applying systematic analytical techniques to historical datasets, you can identify significant patterns, validate timeline accuracy, and create visualizations that communicate complex temporal relationships.

The techniques and approaches outlined here provide a framework for extracting maximum value from historical data while avoiding common pitfalls that lead to misleading timeline interpretations. Success requires combining analytical rigor with domain expertise and clear communication of both findings and limitations.

As data collection capabilities continue expanding and analytical tools become more sophisticated, historical data analysis will become increasingly powerful for timeline creation and interpretation. Organizations that master these techniques will gain significant advantages in understanding their past performance and making informed decisions about future directions.

Conclusion

Strong timelines start with strong data, and historical analysis is what gives them real impact. By cleaning your datasets, spotting long-term patterns, and validating what the numbers are actually saying, you can create timelines that feel sharp, reliable, and easy to explore.

The techniques covered in this article help you cut through the noise and turn years of information into a clear, engaging visual story. With thoughtful analysis and the right tools, your timelines can highlight what truly mattered, and help you spot smarter opportunities moving forward.

Frequently asked questions

These questions address common challenges people face when starting their historical data analysis projects for timeline creation.

You need at least two complete cycles of your pattern to identify trends reliably. The specific timeframe depends on what you're analyzing. For seasonal business patterns, collect 2-3 years of data. For economic cycles or long-term trends, aim for 5-10 years. Product lifecycle analysis might only need 18-24 months if you're tracking rapid changes. Start with whatever data you have - even one year can reveal useful patterns - then expand your dataset as you identify what timeframes matter most for your specific use case.

Document everything first, then choose your strategy based on gap size and context. Small gaps (a few days or weeks) can often be filled using interpolation if you expect gradual changes, or forward-filling if conditions likely remained stable. Large gaps require different approaches:

- For missing quarters: analyze available periods separately rather than estimate.

- For system changes: treat pre-change and post-change data as separate datasets.

- For obvious errors: exclude rather than correct unless you can verify the actual values.

Never guess at missing data points that could significantly alter your timeline interpretation.

Start by mapping all data to a common time structure before any analysis. Create a master timeline with consistent intervals (daily, weekly, monthly) and align all sources to these periods. Pay special attention to:

- time zones and daylight saving changes;

- different fiscal years or reporting periods;

- varying data collection frequencies.

Use the coarsest time interval that all of your sources can support. If one source provides monthly data and another provides daily, aggregate the daily data to monthly for comparison. Keep the original granular data separately for detailed analysis of specific periods.

Validate your findings against known historical events and use multiple analytical approaches.

Cross-reference your timeline patterns with documented external events during the same periods. If your sales data shows a dip in Q2 2020 and you know there was a major disruption that quarter, your analysis gains credibility. Use these validation steps:

- Test your analytical method on periods where you know the outcomes.

- Have colleagues review your interpretation for obvious logical gaps.

- Apply different analytical techniques to the same data and compare results.

- Set confidence levels for your findings and communicate uncertainty ranges.

Remember that correlation doesn't equal causation. Always consider external factors that might explain the patterns you observe.